WSAI 2018 - Amsterdam - The Age of AI Innovation

After two days in Amsterdam for the 2nd edition of the World Summit AI, SQLI gives you an overview of the talks along with some interesting insights.

Many companies have already embarked on AI projects, so what are you waiting for? The technologies are now mature and available, and we can prototype quickly and cheaply. But before kicking off, you must identify a clear, measurable goal based on your business metrics. Don’t be afraid: you don’t need a lot of data to create models, iterate on your code, or test and learn from your models. So let’s go! Collect data, create models, don’t forget to train and retrain your models, and keep in mind that your AI is not a black box: understand it and challenge it.

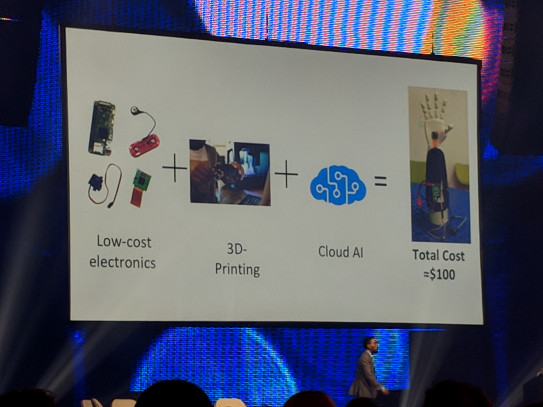

A Cloud AI service behind every device

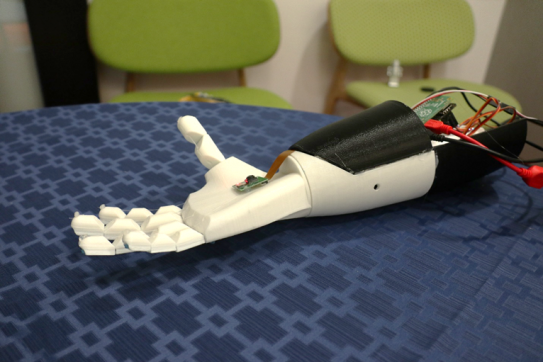

Joseph Sirosh, CTO for AI at Microsoft, introduced us to the work of two students, Samin Khan and Hamayal Choudhry from the University of Toronto, which started during a hackathon (Microsoft Imagine Cup 2018). Thanks to their skills in computer vision, machine learning and mechatronics, they may well be able to build “SmartArm”, a low-cost prosthetic arm designed to have a real impact on the world, for less than $100.

Figure 1. SmartArm prototype

So, how does the connected arm work? A camera placed on the prototype continuously captures live video of the view in front of the palm. This video feed is processed by an onboard Raspberry Pi and run through a machine learning engine developed on Microsoft Azure Machine Learning Studio to estimate the 3D geometric shape of the object. A set of common grips, each associated with a different shape, is stored in memory.

Once the shape is determined, the prototype selects the corresponding grip that allows the user to interact properly with the object. Because the prototype is cloud-connected, its capabilities are not limited to the model and pre-calculated grips initially loaded on board. Over time, the arm can learn to recognize new types of shapes and perform a wider variety of grasps without any changes to the hardware.

In addition, users can train the arm to perform new grasps by sending video samples to the cloud along with the finger positions of the desired grip.
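The grip-selection step described above can be pictured as a lookup table that the cloud is able to extend. The sketch below is a minimal, hypothetical illustration, not SmartArm’s actual code: the shape labels, the five finger-closure values per grip and the `GripController` class are all assumptions, and the vision model that estimates the shape is left out (we simply assume it returns a label such as "cylinder").

```python
from dataclasses import dataclass, field

# Hypothetical grip table: shape label -> finger closure per finger
# (thumb to little finger), 0.0 = fully open, 1.0 = fully closed.
DEFAULT_GRIPS = {
    "cylinder": (0.8, 0.9, 0.9, 0.9, 0.9),  # e.g. a bottle or a cup
    "sphere":   (0.6, 0.7, 0.7, 0.7, 0.6),  # e.g. a ball or an apple
    "flat":     (0.9, 0.9, 0.0, 0.0, 0.0),  # e.g. pinching a card
}


@dataclass
class GripController:
    # Copy the defaults so cloud updates never mutate the shared table.
    grips: dict = field(default_factory=lambda: dict(DEFAULT_GRIPS))
    fallback: str = "sphere"

    def select_grip(self, shape: str) -> tuple:
        """Return the stored grip for a recognised shape, or a fallback grip."""
        return self.grips.get(shape, self.grips[self.fallback])

    def update_from_cloud(self, new_grips: dict) -> None:
        """Merge grips received from the cloud; no hardware change needed."""
        self.grips.update(new_grips)


controller = GripController()
print(controller.select_grip("cylinder"))
controller.update_from_cloud({"handle": (0.9, 1.0, 1.0, 0.5, 0.0)})
print(controller.select_grip("handle"))
```

Keeping grips as data rather than code is what lets new grasps arrive over the network, which matches the “no changes to the hardware” point above.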

Figure 2. Microsoft AI - connected arm

More info: https://www.youtube.com/watch?v=XCwwspwXD9Q&t=316s

Takeaway: falling hardware prices combined with the growing range of AI services in the cloud (you no longer have to develop your own AI, you can help yourself from the cloud) lead us to think that we will see more and more connected devices powered by AI, making our day-to-day lives easier and accelerating and automating processes.

The AI model must optimise a relevant business metric

Figure 3. Pandora - Oscar Celma (Head of AI) - 5 lessons learned

The second insight we would like to share came from Oscar Celma, Head of AI at Pandora, who presented his findings based on building a large recommender system. He shared the following 5 lessons:

#1 Implicit user signals are more useful than explicit ones

#2 Do not always choose the most accurate model

#3 Models should optimize a relevant business metric

#4 Experimentation and production models should be the same

#5 Domain expertise is fundamental in a ML/AI team

We chose to focus on #3, “Models should optimize a relevant business metric”, which is certainly the most important today. So, what did Oscar Celma want to share? Simply the need to choose your business KPI before launching an AI initiative, in order to maximize the impact of your AI model on business revenue. In his example, Pandora focuses on “user lifetime value” (the most important metric for a business, of course!), analysing historical data from its music streaming platform to evaluate the AI model and then to predict activity metrics (short-term: likes, skips, listens…) and retention metrics (long-term: time spent, active days…).
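To make lessons #2 and #3 concrete, here is a minimal, hypothetical sketch of choosing between candidate models by a business score rather than by accuracy. The metric names, the 0.3/0.7 weights, the normalisation caps and the numbers are all illustrative assumptions, not Pandora’s method or data.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    accuracy: float        # offline accuracy, e.g. on skip prediction
    listen_minutes: float  # short-term activity proxy (avg minutes per session)
    active_days: float     # long-term retention proxy (active days per month)


def business_score(c: Candidate, w_short: float = 0.3, w_long: float = 0.7,
                   max_minutes: float = 60.0, max_days: float = 30.0) -> float:
    """Hypothetical weighted blend of normalised activity and retention metrics."""
    return w_short * (c.listen_minutes / max_minutes) + w_long * (c.active_days / max_days)


def pick_model(candidates: list[Candidate]) -> Candidate:
    """Select the candidate that maximises the business score, not accuracy."""
    return max(candidates, key=business_score)


# Illustrative numbers only (not Pandora data).
model_a = Candidate("A", accuracy=0.91, listen_minutes=30, active_days=12)
model_b = Candidate("B", accuracy=0.87, listen_minutes=36, active_days=15)
best = pick_model([model_a, model_b])
print(best.name)  # B: less accurate, but better on the business metric
```

Here model A scores 0.43 and model B 0.53, so B is chosen even though A is more accurate. In practice the weights themselves are a business decision to agree on before the project starts.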

Takeaway: the potential of AI models is vast, but they are still complex to integrate into the business value chain, so you must focus on the main business metrics.

Train the models

Training an AI model is like training an athlete: it is what ensures high performance. During the World Summit AI, we discovered different ways to train models.

Figure 4. Unity - ML-Agents Toolkit

Unity has developed a virtual environment and the ML-Agents Toolkit to automate the training of AI models. Danny Lange, VP of AI at Unity Technologies, explained the principle of reinforcement learning, in which an agent collects observations about the state of its environment, performs an action, and receives a reward for that action. To illustrate this concept, he presented “Puppo”, a demo in which reinforcement learning is used as an alternative way to define the behaviour of an NPC (Non-Player Character) in a video game.

Training an NPC using reinforcement learning is quite similar to training a puppy to play fetch. Here, the environment is the game scene and the agent is Puppo. We show the puppy a treat and then throw the stick. At first, the puppy wanders around, unsure what to do, until it eventually picks up the stick and brings it back, promptly earning a treat. After a few sessions, the puppy learns that retrieving the stick is the best way to get a treat and keeps doing it. That is precisely how reinforcement learning trains the behaviour of an NPC.

We give our NPC a reward whenever it completes a task correctly. Through many simulations of the game (the equivalent of many fetch sessions), the NPC builds an internal model of which action to take in each situation to maximize its reward, which results in the desired behaviour. For more info: https://www.youtube.com/watch?v=OxR1ZFFPnro
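The observation/action/reward loop described above can be sketched with tabular Q-learning on a toy fetch game. This is a hypothetical illustration, not ML-Agents code: ML-Agents trains neural-network policies inside Unity with algorithms such as PPO, whereas here the two states, three actions, reward of 1 for bringing the stick back, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all assumptions chosen for readability.

```python
import random

# Toy fetch game (hypothetical): what the agent observes and can do.
STATES = ("stick_on_ground", "holding_stick")
ACTIONS = ("wander", "pick_up", "bring_back")


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Return (next_state, reward, done) for the toy fetch game."""
    if state == 0 and action == 1:   # pick up the stick
        return 1, 0.0, False
    if state == 1 and action == 2:   # bring it back: treat!
        return 0, 1.0, True
    return state, 0.0, False         # anything else changes nothing


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, max_steps=20, seed=0):
    """Tabular Q-learning: q[s][a] estimates the future reward of action a in state s."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in STATES]
    for _ in range(episodes):        # one episode = one fetch session
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore sometimes, otherwise take the best-known action.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = max(range(len(ACTIONS)), key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Move the estimate towards reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def policy(q):
    """Greedy action for each state after training."""
    return {STATES[s]: ACTIONS[max(range(len(ACTIONS)), key=lambda a: q[s][a])]
            for s in range(len(STATES))}


print(policy(train()))
```

After training, the greedy policy should be to pick up the stick and then bring it back, which is the “learned fetch” behaviour described in the talk, obtained only from rewards.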

Takeaway: with this toolkit, Unity offers an interesting way to automate the training of models for game development.

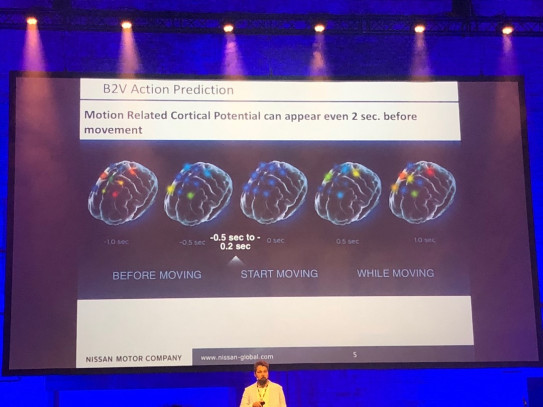

In a Tech Talk, Dr. Lucian Gheorghe, Senior Innovation Researcher at Nissan, introduced Brain-to-Vehicle, a technology developed by Nissan Intelligent Mobility that uses brain wave technology to help a car with autonomous capabilities execute evasive manoeuvres faster. By recognizing whether a driver is about to brake, swerve, or perform some other evasive move, Nissan says that this “brain-to-vehicle” interface could help a car begin those actions between 0.2 and 0.5 seconds faster.

Brain-to-Vehicle technology relies on two kinds of brain activity: activity that precedes an intentional movement (e.g. steering), known as movement-related cortical potential (MRCP), and activity that reveals a mismatch between what the driver expects and what they are experiencing (e.g. the car moving too fast for comfort), known as error-related potentials (ErrP). This brainwave activity is measured by a skullcap worn by the driver (a headset dotted with electrodes), then analysed and interpreted so that the onboard autonomous systems can act on it immediately.

- In autonomous driving mode, by detecting and evaluating driver discomfort, artificial intelligence can alter the driving configuration or driving style to make the driver feel at ease.

- When in manual driving mode, by identifying signs that the driver's brain is about to initiate a movement - such as turning the steering wheel or pushing the accelerator pedal - driver assist technologies can begin the action more quickly.

Figure 5. Nissan - Brain-to-Vehicle

More info: https://www.youtube.com/watch?v=oCi6taII6Yo

Takeaway: this is another way to implement reinforcement learning, using brainwaves as observations, the driver’s manoeuvres as actions and driving pleasure as the reward.

Better understand and challenge AI

As Dr. Evert Haasdijk, Senior Manager and AI Expert at Deloitte, explained at the beginning of Day 2, we need explainable AI to combat bias and inequality (for example, to avoid algorithms that amplify gender stereotypes), to understand when we can (and cannot) rely on models, and to explain decisions or predictions. In his view, one way to achieve transparency is to identify and inspect models, perform statistical analysis and explain approximations.
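One common statistical way to inspect a black-box model is permutation feature importance: shuffle one input column at a time and measure how much accuracy drops. The plain-Python sketch below is an illustration of that technique, not Deloitte’s approach; the `biased_model`, its three features and the generated data are hypothetical. (scikit-learn offers a production version as `sklearn.inspection.permutation_importance`.)

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled (higher = relied on more)."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model reproducing biased historical decisions.
# Features: [years_experience, sensitive_attr, office_id].
def biased_model(row):
    years, sensitive_attr, _office_id = row
    return int(years > 5 or sensitive_attr == 1)


X = [[years, attr, years % 3] for years in range(11) for attr in (0, 1)]
y = [biased_model(row) for row in X]  # labels copied from the same biased history
print(permutation_importance(biased_model, X, y))
```

A clearly positive score for `sensitive_attr` flags that the model depends on it, which is exactly the kind of finding that should be challenged, while the unused `office_id` column scores exactly zero.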

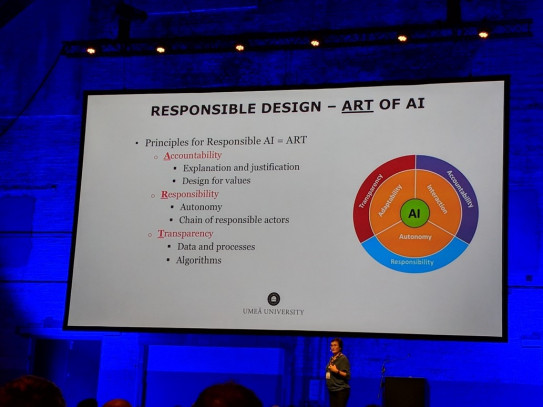

Figure 6. Umeå University - The ART of AI

Later in the day, Virginia Dignum, Chair of Social and Ethical Artificial Intelligence at Umeå University, described the responsible design of AI around three principles (the ART of AI):

- Accountability: design algorithms that reflect values through norms (law, policy…) and account for their societal and ethical implications

- Responsibility: ensure integrity of stakeholders and stakes

- Transparency: combat opacity of data, process, assumptions, approximations and algorithm models

Takeaway: the technology is now mature, but the next challenge will be to explain the results of all the AI available in the cloud in order to ensure trust in your applications.